By: Julio Perera on February 27th, 2024

How to create and use a local Storage Class for SNO (Part 2)

This is the second part of a two-part blog series in which we show the specific steps we followed to install a Local Storage Provider for SNO deployments on Bare Metal. For important considerations and a general discussion, see the previous blog entry.

We use our own scripting for all these needs, but in this blog entry we describe the actions the scripting takes to provision the Operator, the Storage Cluster and the Storage Class, including making the Storage Class the default.

All actions are assumed to be performed using either the OpenShift web Console or the command line interface via “oc”.

First, following the Operator documentation, we create a Project / Namespace for the Operator if it doesn’t already exist. Note that the recommended project for this Operator is always “openshift-storage”, as per the Operator documentation.

To do so, we can submit the following YAML to the cluster:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: "openshift-storage"
  annotations:
    openshift.io/display-name: "LVM Storage for SNO (Common)"
```

Notice that we can submit this Project definition to the cluster via “oc apply -f <file-name>.yaml”, given that the file contains the YAML above. We can also use the Red Hat OpenShift Console by clicking the plus (+) sign in the upper right corner, pasting the contents and pressing <Create>. The “oc apply” command will modify the Project if it already exists or create it if it doesn’t.
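The same Namespace can also be applied from the command line without saving a file, by piping the YAML to “oc apply -f -”. The following is a minimal sketch; the function name “apply_lvms_namespace” is hypothetical, and it assumes “oc” is already logged in to the cluster:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create or update the openshift-storage Namespace by
# piping the manifest to "oc apply" ("-f -" reads it from stdin).
apply_lvms_namespace() {
  oc apply -f - <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: "openshift-storage"
  annotations:
    openshift.io/display-name: "LVM Storage for SNO (Common)"
EOF
}
```

Calling “apply_lvms_namespace” more than once is harmless, since “oc apply” creates the Namespace when it is missing and updates it otherwise. The OperatorGroup and Subscription definitions can be applied the same way.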

Next, we create an Operator Group so that the Operator can be installed afterwards. To do so, we can use the following YAML:

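```yaml
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: lvms-operator-group
  # Same namespace as the Subscription, independent of the current project
  namespace: openshift-storage
spec:
  targetNamespaces:
    - openshift-storage
```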

After that, we create the Operator Subscription. To do so, we used the following YAML:

```yaml
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: "lvms-operator"
  namespace: "openshift-storage"
spec:
  channel: "stable-4.12"
  installPlanApproval: Automatic
  name: lvms-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
```

Note that we are using an “Automatic” Install Plan Approval setting. If you do not want the Operator to upgrade automatically in the future (within the channel), setting the value to “Manual” should accomplish that; however, the generated Install Plan will then need to be approved manually.
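With “Manual” approval, pending Install Plans can be listed with “oc get installplan -n openshift-storage” and approved by setting “spec.approved” to true. A minimal sketch of that step follows; the function name “approve_pending_installplans” is hypothetical, and it approves every unapproved Install Plan in the namespace, so review the list first if other Operators share the namespace:

```shell
#!/usr/bin/env bash
# Hypothetical helper for installPlanApproval: Manual.
# Approves every InstallPlan in the namespace whose spec.approved is false.
# Assumes "oc" is logged in with rights to patch InstallPlans.
approve_pending_installplans() {
  local ns="${1:-openshift-storage}"
  local plan
  # Print "<name> <approved>" per InstallPlan and keep the unapproved names.
  for plan in $(oc get installplan -n "$ns" \
      -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.approved}{"\n"}{end}' \
      | awk '$2 == "false" {print $1}'); do
    oc patch installplan "$plan" -n "$ns" --type merge -p '{"spec":{"approved":true}}'
    echo "approved ${plan}"
  done
}
```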

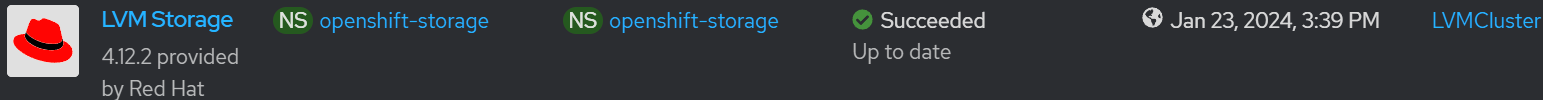

After that, we should wait until the Operator has been installed in the cluster. To check, we can use “oc get csv -n openshift-storage” and look for the value under PHASE which should be “Succeeded” as shown below:


If using the OpenShift Console, go to Operators -> Installed Operators then look for the “LVM Storage” Operator in the “openshift-storage” Namespace. It should show “Succeeded” as per the below screenshot:
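Instead of re-running the “oc get csv” check by hand, the phase can be polled from a script. This is a minimal sketch assuming “oc” is logged in; the function name “wait_for_lvms_csv”, the CSV name prefix “lvms-operator” and the retry defaults are assumptions made for illustration:

```shell
#!/usr/bin/env bash
# Sketch: poll until the LVM Storage CSV reports PHASE "Succeeded".
# The CSV name prefix and the retry/delay defaults are assumptions.
wait_for_lvms_csv() {
  local ns="${1:-openshift-storage}"
  local attempts="${2:-60}"   # number of checks
  local delay="${3:-10}"      # seconds between checks
  local prefix="lvms-operator"
  local phase=""
  local i
  for ((i = 1; i <= attempts; i++)); do
    # Print "<csv-name> <phase>" per CSV and keep the phase of the LVMS one.
    phase=$(oc get csv -n "$ns" \
      -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}' \
      2>/dev/null | awk -v p="$prefix" 'index($1, p) == 1 {print $2}')
    if [[ "$phase" == "Succeeded" ]]; then
      echo "Succeeded"
      return 0
    fi
    sleep "$delay"
  done
  echo "Timed out waiting for the ${prefix} CSV in ${ns} (last phase: ${phase:-none})" >&2
  return 1
}
```

For example, “wait_for_lvms_csv openshift-storage 60 10” checks up to 60 times, 10 seconds apart, and returns non-zero on timeout, so it can gate the LVMCluster step in a script.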

Next, we should submit the definition for the LVM Storage Cluster. To do so, we submitted the following YAML file:

```yaml
kind: LVMCluster
apiVersion: lvm.topolvm.io/v1alpha1
metadata:
  name: "lvms-vg1"
  namespace: "openshift-storage"
  finalizers:
    - lvmcluster.topolvm.io
spec:
  storage:
    deviceClasses:
      - deviceSelector:
          paths:
            - /dev/sdb
        name: vg1
        thinPoolConfig:
          name: "thin-pool-1"
          overprovisionRatio: 10
          sizePercent: 90
```

Notice that we used “/dev/sdb” as the device (which, as stated before, is the secondary hard disk on the VM), with an overprovisioning ratio of “10” and a size percent of “90”. These values can be modified after reading the relevant considerations in the Operator documentation.
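To illustrate how the two “thinPoolConfig” values interact, here is a worked example for a hypothetical 100 GiB “/dev/sdb”. The disk size is an assumption, and LVM metadata overhead is ignored, so real figures will be slightly lower:

$$
\begin{aligned}
\text{thin pool size} &\approx \frac{\text{sizePercent}}{100} \times \text{VG size} = 0.90 \times 100\,\text{GiB} = 90\,\text{GiB} \\
\text{provisionable capacity} &\approx \text{overprovisionRatio} \times \text{thin pool size} = 10 \times 90\,\text{GiB} = 900\,\text{GiB}
\end{aligned}
$$

In other words, claims could request roughly 900 GiB in total, while the data actually written must still fit in roughly 90 GiB of thin pool.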

The operation will proceed, and after a while the “status” of the device in the LVMCluster YAML should show “Ready”. We can use the following “oc” command to retrieve it:

```shell
oc get lvmcluster lvms-vg1 -n openshift-storage -o=jsonpath='{.status.deviceClassStatuses[?(@.name=="vg1")].nodeStatus[0].status}'
```

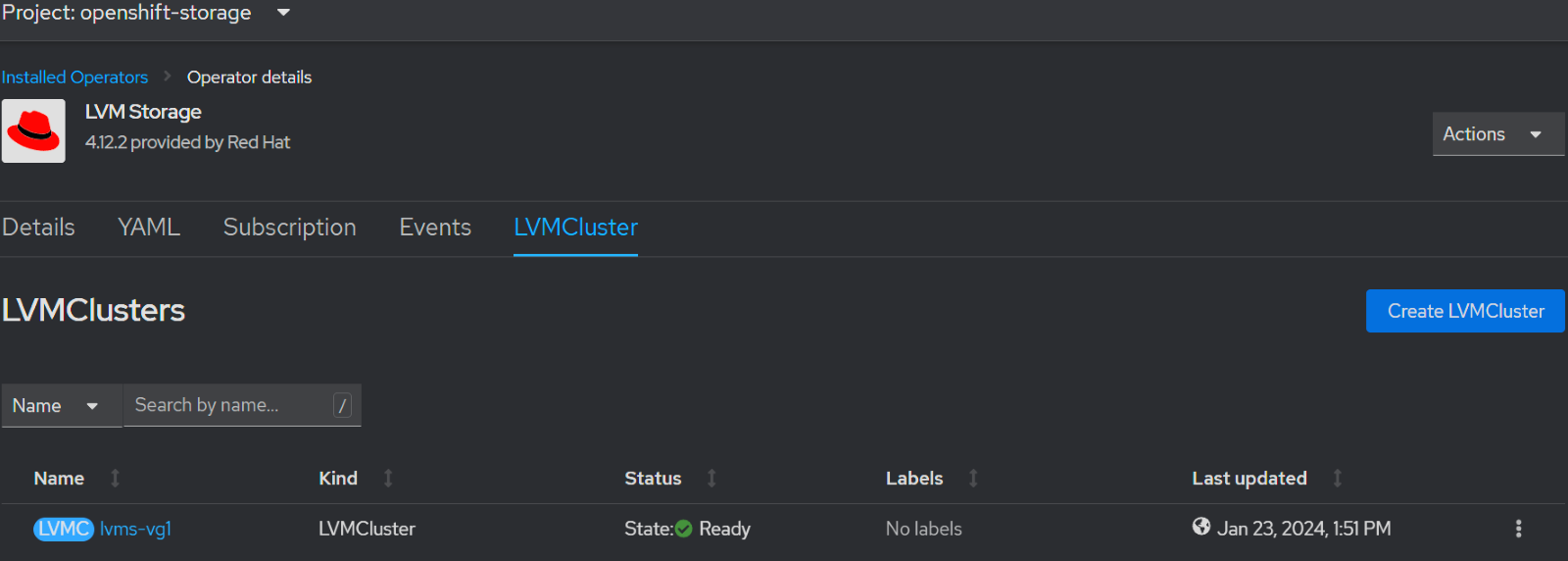

The command should output “Ready” once the LVMCluster is ready, as per above. The status can also be checked in the OpenShift Console: go to Operators -> Installed Operators and look for the “LVM Storage” Operator in the “openshift-storage” Namespace. Click on it, switch to the “LVMCluster” tab, and verify that it shows “State: Ready” under Status, as per the below screenshot:

Note that the Status value shown in the OpenShift Console is the overall LVMCluster state rather than the device status checked above, but it should suffice. Otherwise, open the LVMCluster details and look directly at its YAML for the device status.

Be patient, as it may take a while for the device to become “Ready”, and the LVMCluster may show “Failed” at times in the meantime.
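Because the device can take a while to become “Ready”, the jsonpath query above can be wrapped in a polling loop that tolerates transient “Failed” states until a timeout. A minimal sketch, assuming “oc” is logged in; the function name “wait_for_lvms_device” and the retry defaults are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: poll the status of device class "vg1" in LVMCluster "lvms-vg1"
# (same jsonpath query as above) until it reports "Ready".
# Retry count and delay defaults are arbitrary assumptions.
wait_for_lvms_device() {
  local ns="${1:-openshift-storage}"
  local attempts="${2:-90}"
  local delay="${3:-10}"
  local status=""
  local i
  for ((i = 1; i <= attempts; i++)); do
    status=$(oc get lvmcluster lvms-vg1 -n "$ns" \
      -o=jsonpath='{.status.deviceClassStatuses[?(@.name=="vg1")].nodeStatus[0].status}' 2>/dev/null)
    if [[ "$status" == "Ready" ]]; then
      echo "Ready"
      return 0
    fi
    # A transient "Failed" is not treated as final; keep polling until timeout.
    sleep "$delay"
  done
  echo "Device class vg1 not Ready after ${attempts} checks (last status: ${status:-none})" >&2
  return 1
}
```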

Should problems arise, go to the Pods in the “openshift-storage” Namespace and check the logs of the “controller” or “node” Pods for errors that may explain what is happening.
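Collecting those logs can be sketched as a small loop over the matching Pods. The function name “show_lvms_pod_logs”, the “controller|node” name filter and the “--tail” default are assumptions; adjust the filter to the Pod names actually present in the namespace:

```shell
#!/usr/bin/env bash
# Sketch: print recent log lines from Pods whose names contain "controller"
# or "node" in the given namespace. Filter and tail length are assumptions.
show_lvms_pod_logs() {
  local ns="${1:-openshift-storage}"
  local tail="${2:-100}"
  local pod
  # "oc get pods -o name" prints entries such as "pod/<name>".
  for pod in $(oc get pods -n "$ns" -o name | grep -E 'controller|node'); do
    echo "=== ${pod} ==="
    oc logs "$pod" -n "$ns" --all-containers=true --tail="$tail"
  done
}
```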

As a result of creating the LVM Storage Cluster, a Storage Class should have been created. However, we would like to change its “volumeBindingMode” to “Immediate” instead of the default “WaitForFirstConsumer”, and this value cannot be modified once the Storage Class exists, because the parameter is immutable.

To work around that, we drop and recreate the Storage Class with the same values, except for the “volumeBindingMode”, and we also set it as the default Storage Class. To do so, we copy the current definition, delete the Storage Class, and re-add it with the following modifications:

- Add “storageclass.kubernetes.io/is-default-class: 'true'” under “metadata.annotations” to make it the default Storage Class.

- Modify the “volumeBindingMode” to “Immediate”, which allows immediate testing of the Provisioner: creating a Persistent Volume Claim should result in a Persistent Volume being created and bound to it without waiting for a consuming Pod.
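The copy, delete and re-add steps can be sketched as two small functions. The function names and the file name “lvms-vg1-sc.yaml” are hypothetical. The exported YAML also carries server-populated fields (“metadata.uid”, “metadata.resourceVersion”, “metadata.creationTimestamp”) that should be removed while editing, since the API server rejects a create request that sets “resourceVersion”:

```shell
#!/usr/bin/env bash
# Sketch of the drop-and-recreate steps; names and file path are illustrative.

# Step 1: save the current StorageClass definition so it can be edited.
export_storageclass() {
  local sc="${1:-lvms-vg1}"
  local file="${2:-${sc}-sc.yaml}"
  oc get storageclass "$sc" -o yaml > "$file"
}

# Step 2: after editing the file (default-class annotation, volumeBindingMode,
# removal of uid/resourceVersion/creationTimestamp), delete and re-add it.
recreate_storageclass() {
  local sc="${1:-lvms-vg1}"
  local file="${2:-${sc}-sc.yaml}"
  # Refuse to delete the StorageClass if there is no saved definition to restore.
  if [[ ! -s "$file" ]]; then
    echo "No saved definition in ${file}; run export_storageclass first" >&2
    return 1
  fi
  oc delete storageclass "$sc" && oc apply -f "$file"
}
```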

See below for how it looked in our case:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: lvms-vg1
  annotations:
    description: Provides RWO and RWOP Filesystem & Block volumes
    storageclass.kubernetes.io/is-default-class: 'true'
provisioner: topolvm.io
parameters:
  csi.storage.k8s.io/fstype: xfs
  topolvm.io/device-class: vg1
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: Immediate
```
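With “volumeBindingMode: Immediate”, a test claim such as the following should be bound as soon as it is created, with no Pod consuming it. The claim name, namespace and size are arbitrary choices for illustration:

```yaml
# Hypothetical test claim; name, namespace and size are illustrative only.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: lvms-test-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  # May be omitted once lvms-vg1 is the default Storage Class.
  storageClassName: lvms-vg1
```

After applying it, “oc get pvc lvms-test-pvc -n default” should report a STATUS of “Bound”. The claim can then be removed with “oc delete pvc lvms-test-pvc -n default”.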

Notice that we also used a “reclaimPolicy” of “Delete”. If that is a concern, “Retain” can be used instead, but then any Persistent Volumes that remain after their associated Persistent Volume Claims have been deleted must be deleted manually.
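With “Retain”, the leftover volumes show a phase of “Released” once their claim is gone. A minimal sketch for listing them, so they can be reviewed and then removed with “oc delete pv <name>”, follows; the function name “released_pvs” is hypothetical. Per the Kubernetes documentation on the Retain policy, deleting the PV object does not by itself delete the underlying storage, so the backing logical volume may need separate cleanup:

```shell
#!/usr/bin/env bash
# Sketch: list PVs of the given StorageClass whose phase is "Released"
# (their claim was deleted). Listing only; nothing is deleted here.
released_pvs() {
  local sc="${1:-lvms-vg1}"
  # Print "<pv-name> <storageClassName> <phase>" per PV and filter with awk.
  oc get pv \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.storageClassName}{" "}{.status.phase}{"\n"}{end}' \
    | awk -v sc="$sc" '$2 == sc && $3 == "Released" {print $1}'
}
```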

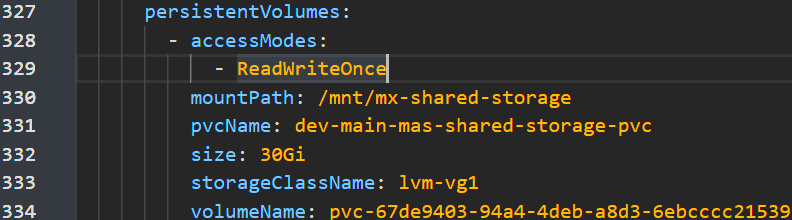

As a final comment, if using a Persistent Volume approach for the Maximo Application Suite Manage application to store DOCLINKS or other files, ensure that the related ManageWorkspace YAML is modified accordingly, so that the value of “accessModes” under “persistentVolumes” is “ReadWriteOnce”, matching the Storage Class. See below for a screenshot:

This should work with newer versions of the Manage application, as older versions have a bug in the “entitymgr-ws” Pod that prevents it from picking up the above definition correctly.
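For reference, the relevant part of a ManageWorkspace might look like the fragment below. Only the “ReadWriteOnce” value under “accessModes” comes from the text above; the nesting under “spec.settings.deployment” and the “mountPath”, “pvcName” and “size” values are assumptions for illustration, so compare against your own ManageWorkspace Custom Resource and the MAS documentation before applying anything:

```yaml
# Hypothetical ManageWorkspace fragment: nesting and values other than
# accessModes are illustrative assumptions.
spec:
  settings:
    deployment:
      persistentVolumes:
        - accessModes:
            - ReadWriteOnce
          mountPath: /DOCLINKS
          pvcName: manage-doclinks
          size: 20Gi
          storageClassName: lvms-vg1
```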

Other places where the Access Mode needs to be changed to “ReadWriteOnce” are:

- On the Image Registry Storage PVC when using a Bare Metal approach and attaching storage to the default Image Registry. We are going to cover that case in our next blog entry.

- On the DB2 Cluster definition, when creating a local (in-cluster) DB2 instance: in the “spec.storage[*].spec.accessModes” array of the “db2uCluster” Custom Resource.
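As an illustration of the DB2 case, a “db2uCluster” storage section might look like the fragment below. Only the “spec.storage[*].spec.accessModes” path is given above; the entry names, “type” values and sizes are hypothetical and should be checked against your own Custom Resource:

```yaml
# Hypothetical db2uCluster fragment: only the spec.storage[*].spec.accessModes
# path is taken from the text; names, types and sizes are illustrative.
spec:
  storage:
    - name: meta
      type: create
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: lvms-vg1
    - name: data
      type: template
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 100Gi
        storageClassName: lvms-vg1
```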